But clearly, this blog is still here, and so am I. I should write more often. Is this thing on?

July 31, 2025

April 1, 2023

Once Upon a Tome: The Misadventures of a Rare Bookseller by Oliver Darkshire

A few days ago my beloved son, after asking how my day was and getting my customary laconic reply, said “you know, Dad, it’s OK to say more than a couple of words”. After careful consideration, I realized I might have gotten used to silence and inner reflection to a degree that is perhaps less than useful. I could, perhaps, benefit to some small extent from fleshing out and airing some of those thoughts, ideas, flashes of inspiration, and plain silliness that cavort around in my skull all the time.

So with that in mind, let’s try to awaken from its slumber (raise from the dead?) a muscle I rarely use nowadays: writing the occasional non-technical bit, using more than a couple of words or 140 characters. To wit, a book report.

I recently finished reading “Once Upon a Tome: The Misadventures of a Rare Bookseller”, by Oliver Darkshire. This was a delightful book, albeit not quite what I expected.

Last year, when we were last in London, I made a point of visiting Sotheran’s, a rare book store. This was partly because I love book stores, especially of the rare or esoteric kind, and partly because I had a thin sliver of hope we would meet Oliver Darkshire. Oliver, you see, was writing the store’s twitter feed at the time, and it was one of the best things the Internet had to offer, bar none. I was hoping to run into him and tell him how much I enjoyed it. That is not my usual position, a gushing fan boy, but there you have it, the twitter feed was just that good.

The store’s twitter feed at the time was snarky, quirky, full of unexpected humor and often poignant. The book, is … the same, I guess? Except it’s also raw, contemplative, and maybe a little sad. I’d be hard pressed to say exactly where the sadness comes from; perhaps it is because I got the sense that Oliver constantly measures himself and comes away wanting. It took me longer than usual to read it, because every few pages I’d put it down. It’s a book not to be devoured but rather consumed in small doses.

The book is autobiographical in nature, covering Oliver’s path from a young apprentice to an accomplished bookseller. It is composed of many short vignettes covering all sorts of things: the different kinds of customers you get at a rare book shop, the different kinds of characters who sell rare books for a living, the practicalities of buying and selling books, a slice of London life, and many strange happenings. Perhaps “The Education of a Rare Bookseller” would have been a more accurate title, if less catchy.

My favorite part may have been the trip to acquire books out in the country. Lacking a driver’s license, Oliver takes the train and then ambles through the countryside to the old manor house, where weirdness reigns. Having taken my share of walks through the English countryside to old manor houses, I could feel the experience in near visceral detail.

There’s a lot more in the book but I would like to avoid spoilers. Suffice to say that if you love books, by all means, read this one. Just don’t expect razzle and dazzle; it’s a slice of everyday life, full of hope, books, a bit of angst, and ghosts. Must not forget the ghosts.

August 24, 2022

The Great UK Operation: Day 4, Thu, July 14th, 2022

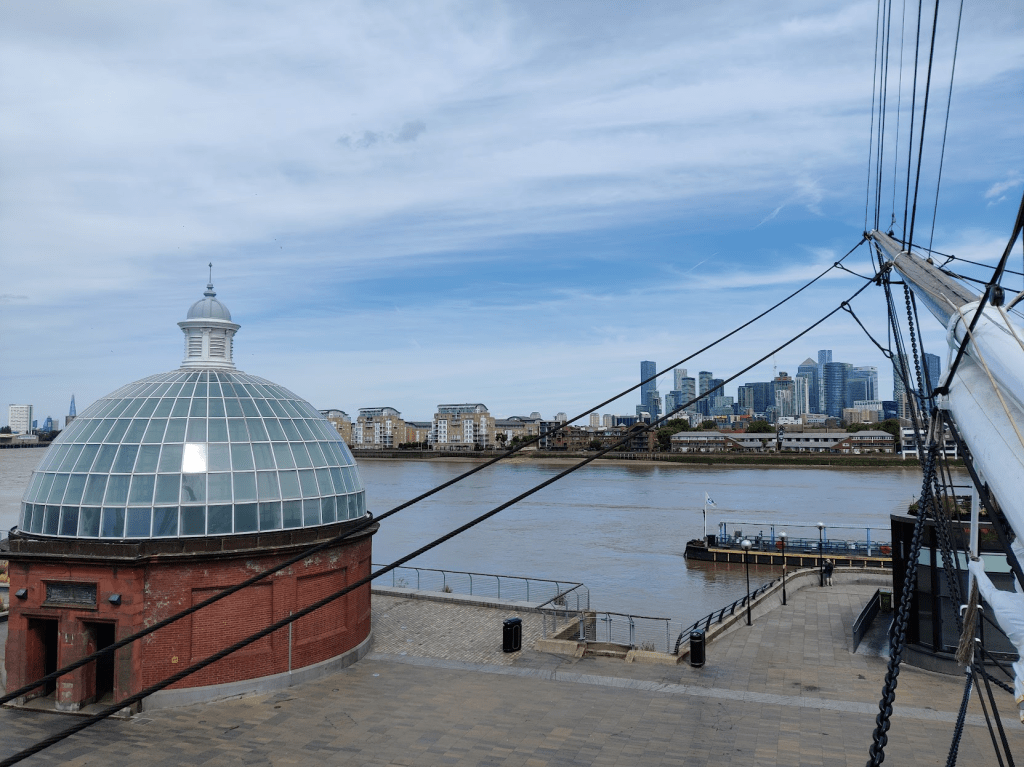

תחבורה היא השאלה. מעבורת על הנהר היא התשובה. מנמל צ’לסי לגריניץ לקאטי סארק, פעם סירת המפרש המהירה בעולם. יש משהו עצוב בסירה נעוצה במקומה על היבשה. יעל עצמאית. זאב מאוכזב שאי אפשר לטפס במעלה התורן. אמא מתמוגגת מהסירה. אבא נזכר במלחמות האופיום של ילדותו. מהסירה לשוק גריניץ’. צהריים בפאב. לקינוח פאדג’ במגוון שפות. אבא עייף. כסא נוח ולישון. מהשוק למוזיאון הימי. יעל מתחת לעץ. אבא ישן במזגן. זאביק מצייר את מפת זאבוניה. כבר ארבע אחר הצהריים ובערב יש הצגה. חזרה לנוח או שנסחוב? סוחבים. מעבורת על הנהר לסוהו. נחילי אדם. אין מקום באף מסעדה. יעלי מציעה שנכנס לבר אבוקדו. מסתבר שנועה ארבל מהשכבה עפה על זה באינסטגרם. המבורגר עם אבוקדו, לובסטר עם אבוקדו, גוואקמולי עם אבוקדו, סמות’י אבוקדו, וויפי עם אבוקדו, חשבון עם אבוקדו. קדימה לתיאטרון למלכודת העכברים. תיאטרון של פעם. מחזה מפעם. בית הארחה. סופת שלגים. המארחת, בעלה, הגברת, הארכיטקט, המייג’ור, השוטר. מי הרוצח? לא אנחנו. הפסקה. קרשנדו. זה לא מי שאתם חושבים. חזרה לדירה. המקרר חזר לעבוד בעזרתם האדיבה של סעידה ובעלה. מסתבר שהוא עובד על חשמל ומישהו כיבה את השקע. קפיצה מהירה לקואופ לפני שסוגרים. הסורג יורד. גם המסך. לילה טוב.

The question is transportation. The answer is a river boat. From Chelsea to Greenwich to the Cutty Sark. Once the world’s fastest tea clipper. There’s something sad about a boat forever docked on land. Yaeli explores on her own. Zeevik is disappointed he cannot climb the main mast. Mom enjoys the boat. Dad remembers his childhood’s opium wars. From the boat to Greenwich market. Lunch at the pub. For dessert, fudge in a myriad of languages. Dad is tired. A comfortable armchair, let’s get some sleep. From the market to the maritime museum. Yaeli settles under a tree. Dad sleeps inside in the AC. Zeevik draws the map of Zeevonia. It’s 4PM already and we have a show in the evening. Back for some rest or shall we carry on? Carrying on. The river boat back to Soho. Throngs of humanity. Restaurants are jam-packed. Yaeli suggests we try the Avocado bar. Apparently Noa Arbel gave it a thumbs up on Instagram. Avocado hamburgers, Avocado lobsters, Avocado guacamole, Avocado smoothies, Avocado wifi, Avocado bill. Onwards to the theater. The Mousetrap awaits. A grand theater from long ago. A show to match. A guest house. A snow storm. The hostess, her husband, the lady, the architect, the major, the policeman. Who is the murderer? Not us. Intermission. Crescendo. It’s not who you think. Back to the airbnb. The fridge works again thanks to Saida and her husband’s help. Apparently, it works on electricity and someone turned the socket off. A quick hop to the co-op before it closes. The shutters go down. So does the curtain. Good night.

August 11, 2022

The Great UK Operation: Day 3, Wed, July 13th, 2022

התחלנו את הבוקר בחדרי המלחמה של צ’רצ’יל. יעלי התחברה לדרמה העולמית. זאביק המתין בסבלנות. אהבנו במיוחד את החדר על צ’רצ’יל במזרח התיכון. גם אנחנו היינו פעם שלוחה זוטרה של האימפריה. מגמלים עברנו לברווזים בפארק סט. ג’יימס. התפצלות. אמא וזאביק לטייט בריטניה. זאביק צועד קדימה וסופר לאחור. זאביק רוצה הפעלה לילדים. אמא רוצה טרנר ורותקו. מאבק כוחות שסופו ידוע מראש. הטייט היה מעולה. הזוועה של הטייט לא תחזור כל עצמה. מסתדרים. זאביק גווע ברעב. לאמא אין אובר. קדימה לפאב. ההמבורגר נטרף. לא היה לו סיכוי. רוח הרפאים הביתית מאשרת. יום בלי לקנות לגו זה לא יום. טוב שהיו חסכונות. עברנו למדוד נפח לגו בקוב. אובר לדירה. הדירה גידלה בריכה. תודה למקרר.

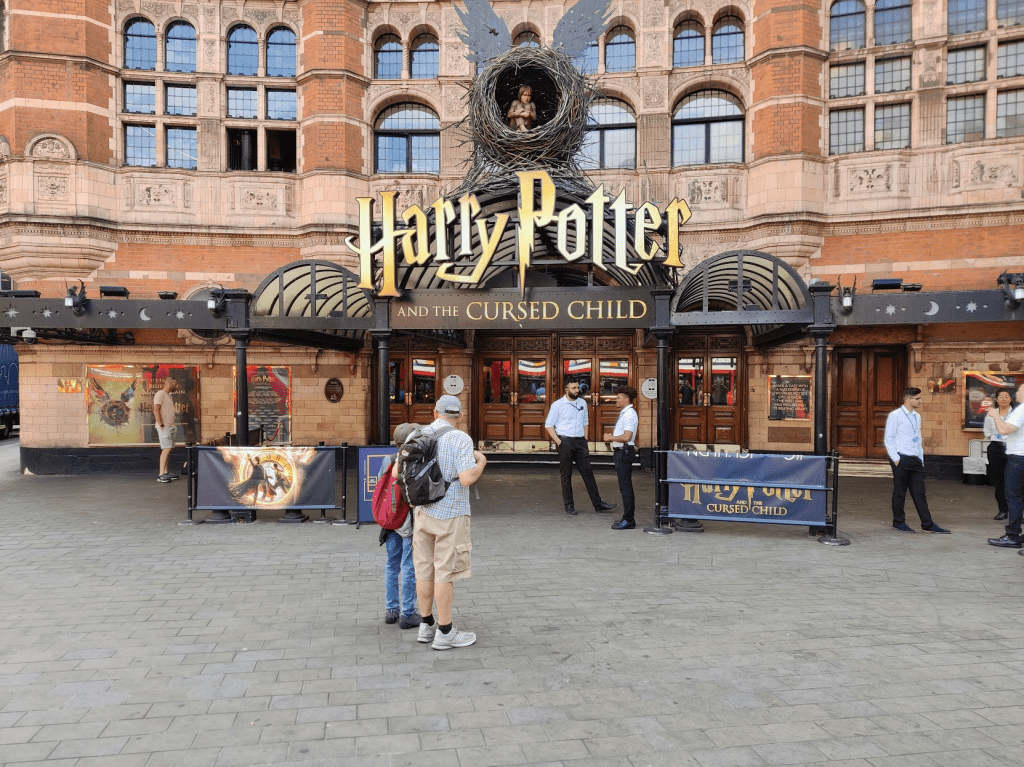

אבא ויעל אצל הילד המקולל. מחזה בשני נצחים שממלא אולמות כבר שנים. חלום של יעלי מאז שהמחזה יצא. תמיד נחמד להגשים חלומות. גם אבא מגשים חלום. מוקה איטלקי, כורסא נוחה ומזגן עוצמתי. אחרי שעתיים וחצי יעלי ריחפה החוצה מהחלק הראשון. ארוחת ערב וחזרה לחלק שני. בית הקפה נסגר. יש אחרים. גם הם נסגרים. יש כיסא על המדרכה. כל אומות העולם צועדות בסך. בתשע וחצי יעלי מרחפת החוצה מהחצי השני. ההצגה היתה מעולה. השחקנים טעו פעמיים. לא טועים בדברי אלוהים חיים. זאביק ואמא מזמן במיטה. הקרב עם המקרר טרם הוכרע. הפשרה קטנה בשעת לילה זעירה. צועדים מסוהו חזרה לצ’לסי. 7 ק”מ של לונדון לילית. הוד, הדר, תהילה דהויה. שיכורים מחפשים ריגושים. אנחנו מחפשים למיטה. הגענו. המקרר עדיין לא עובד. זה יחכה למחר.

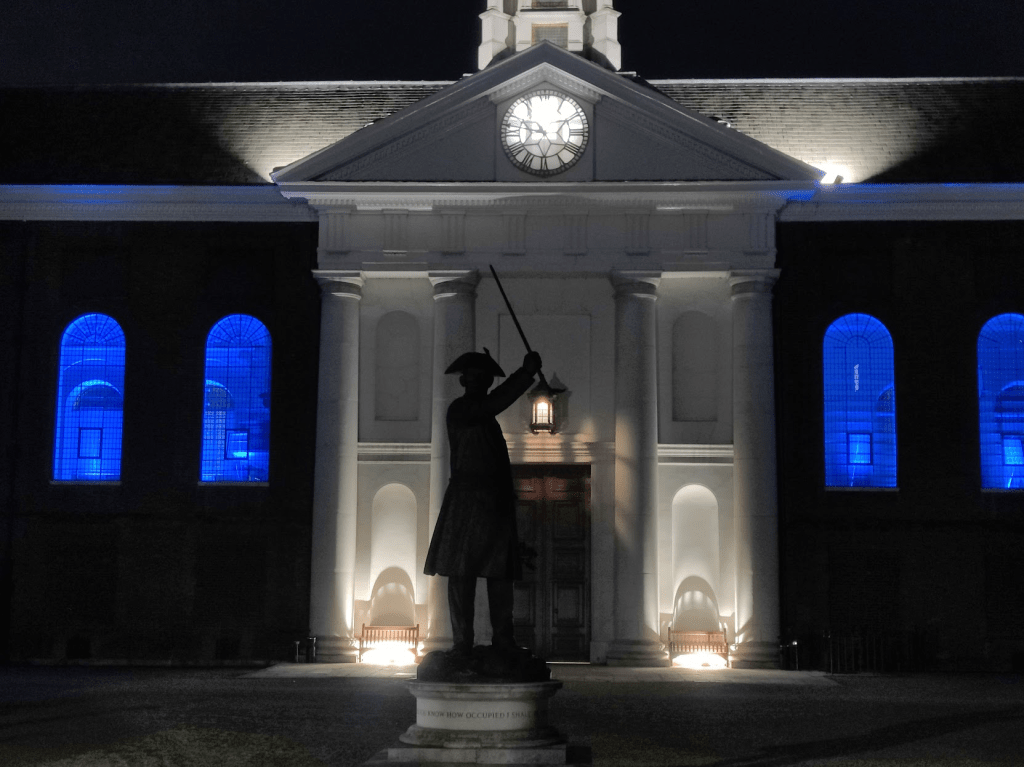

We started the day at The Churchill War Rooms. Yaeli vibed with the world drama. Zeevik waited patiently. We were particularly fond of the “Churchill in the Middle East” exhibition. We, too, were once a faraway outpost of the empire. From camels we moved on to ducks at St. James’s. Splitting up. Mom and Zeevik to the Tate Britain. Zeevik moves forward and counts backwards. Zeevik wants to do “kid stuff”. Mom wants Turner and Rothko. A struggle, its outcome preordained. The Tate was awesome. The Tate was horrible, never again. Equilibrium is reached. Zeevik is starving. Mom cannot get an uber. Let’s go to the pub. The hamburger is devoured, it did not stand a chance. The pub’s local ghost approves. A day without new Legos is not a good day. It’s good we had some savings. We now measure Legos acquired by volume. Uber to the airbnb. The airbnb has grown a pool. The fridge be thanked.

Meanwhile, Dad and Yael are at Harry Potter and the Cursed Child. A show in two eternities that has been filling theater halls for years. Yaeli’s dream since it came out. It’s always nice to make dreams come true. Dad is also having a dream come true while Yael enjoys the show: Italian coffee, a comfortable armchair, and a powerful AC. After two and a half hours Yaeli comes floating out of the first part of the show. Dinner and back for part two. The coffee shop is closed. There are others. They close too. There’s a chair on the sidewalk. Every nation of the world passes by. At nine thirty PM Yaeli floats out of part two. The show was awesome. The actors were wrong twice. You do not meddle with the words of God. Zeevik and mom have been asleep for a while. The battle with the fridge rages on. A small defrosting in the wee hours of the night. We walk back from Soho to Chelsea. Seven kilometers of late night London. Majestic, faded glory. Drunks looking for thrills. We look forward to getting into bed. We have arrived. The fridge still does not work. It will wait for tomorrow.

August 9, 2022

The Great UK Operation: Day 2, July 12th, 2022

קמנו במלון אחרי פחות מדי שעות שינה אבל מי סופר. ארוחת בוקר אנגלית קלאסית. ירקות זה לחלשים, האימפריה צועדת על בייקון, נקניקיות ושעועית. קדימה ללונדון רבתי! שעה וחצי באובר והגענו. הדירה שמצאנו באיירבנב בצ’לסי, בשיכונים. בלונדון אפילו לשיכונים יש שמות מספר של דיקנס. World’s Ends Estate. הדירה חביבה, יש מקום לכולם, אין מזגן. למארחת האלבנית קוראים לומטורי, שזה בעברית סעידה. המשפחה שלה חסידי אומות העולם. יש הרבה ספרים ומדף שלם של קוראן. לפתוח את המים החמים במקלחת פותחים את ברז החמים בכיור, כי למה לא. יש גם משהו לגבי הסוויץ’ של האור במקלחת אבל לא עקבתי. נסתדר. (לא הסתדרנו). משאירים את המזוודות ויוצאים. לונדון מחכה לנו.

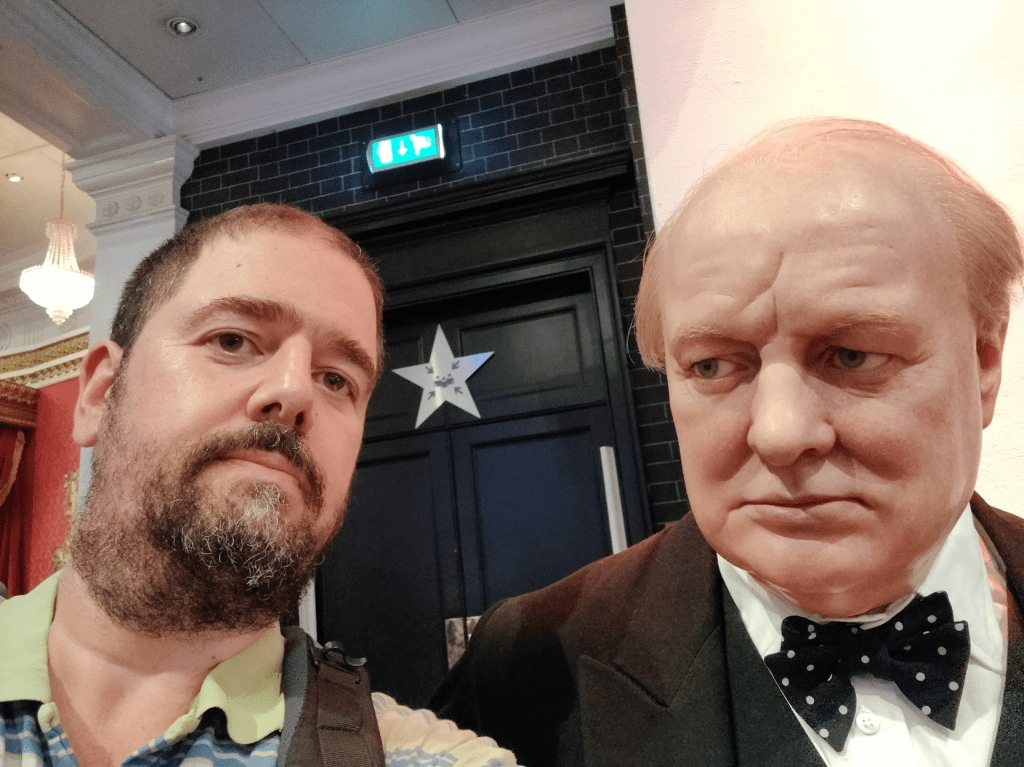

תחנה ראשונה: חנות הלגו ב- Leicester Square. החנות בשיפוצים. קנינו ממתקים במקום. קדימה לחנות ספרים. Waterstones. חמש קומות. אפשר לעבור לגור פה. יעלי בגן עדן. אבא אמא וזאביק ממשיכים ל- Sotheran’s, ספרים עתיקים, כרזות עתיקות, ורוחות רפאים. אבא מתרגש לפגוש את אוליבר, מוכר ספרים ואושיית טוויטר. הלאה למדאם טוסו. ברחובות חם, לח, ומאובק. הגשם הלונדוני בשביתה. רצים להספיק להכנס בזמן. נכנסים בכניסה של הביוקר עם כרטיסים של הבזול ואיכשהו אף אחד לא שם לב. אנחנו בפנים, קדימה להצטלם. קרח לאסקימוסים ותמונות עם בובות לשאר העולם. הילדים מתרגשים וזה מה שחשוב.

צהריים מאוחרים ומנוחה לרגליים בטאקו בל. זאביק מסמן וי. קדימה להמליז, חנות הצעצועים הגדולה. חמש קומות. ילדים צווחים וסניצ’ים מעופפים. קדימה לחנויות עתיקות. מפות עתיקות. סטפני המוכרת חביבה להפליא ונותנת לנו סקירה מקיפה על התעשיה. היינו קונים מפה אבל יש יותר מדי אפסים במחיר. המפות חיכו 400 שנה, יחכו עוד קצת. עוד חנות עתיקות. עצירת מנוחה בפארק מול גזע שחווה את עלייתה ושקיעתה של האימפריה. יין וגבינות בשוק חביב בפינת רחוב לארוחת ערב. השולחן באלכסון והפרימיטיבו מטייל עליו. זה הוא, לא אנחנו (שיכורים). קניות בקו אופ לארוחת בוקר מחר ולדירה. האור במקלחת לא עובד. נתקלח בחושך? סעידה קופצת לסדר. לא דוחפים, לא מושכים, הסוויץ’ שבור אבל אם מכים בו קלות באצבע צרדה האור נדלק. קסם. חם ומזגן איין. רוח זה המזגן החדש. ויהי לילה, יום ראשון בלונדון עבר.

We woke up at the hotel after not enough sleep, but who’s counting. A classic English breakfast. Veggies are for the weak, the empire marches on bacon, sausages, and beans. Onwards to the city! An hour and a half in an Uber and we’re there. Our airbnb apartment is in a Chelsea estate. In London even estates have names from Dickens stories. World’s End Estate. The airbnb is neat, there’s room for everyone, but there’s no AC. Our Albanian host is called Lumturie, which in Hebrew would be Saida. Her family are Righteous Among the Nations. There are plenty of books and a whole shelf for the Quran. To run the hot water in the shower you run the hot water in the sink first, because why not. There was also something about the light switch in the shower, but I did not follow. We’ll manage. (We did not manage.) We leave our suitcases behind and head out. London is waiting for us.

First stop: the Leicester Square Lego shop. The shop is undergoing renovations. We bought sweets instead. Onwards to Waterstones. Five floors. You could move in here. Yaeli has died and gone to heaven. Mom, Dad, and Zeevik continue to Sotheran’s: antique books, antique posters, and ghosts. Dad is excited to meet Oliver, bookseller extraordinaire and Twitter personality. Onwards to Madame Tussauds. The streets are hot, humid, and dusty. The London drizzle is on strike. We run to make it in during our allotted time slot. We enter through the expensive entrance while holding the cheap tickets and somehow no one notices. We’re in, let’s take photos. Ice for eskimos and selfies with mannequins for the rest of the world. The kids are happy and excited and that’s really all that matters.

A late lunch and rest for our aching feet at a Taco Bell. Zeevik crosses another item off the list. Onwards to Hamleys, the large toy store. Five floors. Screaming kids and flying snitches. Onwards to the antique shops. Ancient maps. Stephanie the salesperson is very kind and gives us a comprehensive overview of the antique maps industry. We would’ve bought a map but there were too many zeros in the price tag. The maps have waited for 400 years, they can wait a bit longer. Another antiques shop. A short stop to rest at the park in front of a tree that has seen the rise and decline of the empire. Wine and cheese at a nice corner market for dinner. The table is slanted and the Primitivo goes a-walkin’. It’s the table, not us. (Drunk). Shopping at the co-op for breakfast tomorrow and then back to the apartment. The light switch in the shower does not work. Shower in the dark? Saida stops by to fix it. You don’t push, you don’t pull, the switch is broken but nudge it just right and the light comes on. Magic. It’s hot and there’s no AC. Sea breeze is the new AC. Let there be night, first day in London is over.

August 7, 2022

The Great UK Operation: Day 1, July 11th, 2022

היום הגדול הגיע יום אחד מוקדם מהמתוכנן. האדם מתכנן ואיזיג’ט מבטלת את הטיסה, אז טסים יום קודם, אנגליה מחכה לנו. סגרנו הכל בעבודה, ארזנו מזוודות. אבא, מזוודה קטנה מלאה. אמא, מזוודה קטנה עם מקום לעתיקות. יעלי, 19 ק”ג של סטייל. זאביק, “זה בסדר אם לא אחליף בגדים שלושה שבועות? פשוט צריך מקום ללגו שאקנה”. אפסנו את הכלבים ויצאנו לשדה. שעתיים בתורים אבל מי סופר. שלוש שעות בגייט אבל מי סופר. הטיסה המריאה בחצי שעה איחור אבל מי סופר. המראנו! מחלקת קורונה זה פה. מי שיכל, ישן, מי שלא, ספר את הדקות וניסה למצוא תנוחה נוחה. אין כזו, לא שילמנו מספיק. נחתנו! אחת בלילה. מזוודה אחת הגיעה. שנייה הגיעה. שלישית הגיעה. רביעית, אין. יעלי בלחץ. מחכים. מחכים. מחכים. יש רביעיה! קדימה למלון. שתיים בלילה, מי סופר. אוטובוס או ברגל? אמור להיות קרוב. יאללה, טיול לילי רגלי בשדה התעופה. צועדים צועדים צועדים. הנה המלון. משום מה אין אור דולק, פנסי הרחוב והשלט של המלון כבויים. הרוח מייללת. “אבא, לא אמרת שישנים במלון רדוף רוחות.” אין איש בקבלה אבל יש חדרים. שלוש בלילה ולמיטה, אבל מי סופר.

The big day has arrived, one day earlier than planned. Man plans and easyJet cancels the flight. So we flew one day earlier. The UK is waiting. We wrapped up things at work, packed our suitcases. Dad, a small but full suitcase. Mom, a small suitcase with plenty of room for antiques. Yaeli, 19 kgs of style. Zeevik, “is it OK if I wear the same thing for three weeks? I just need to leave room for all of the Lego I will buy”. The dogs are off to the doggy pension and we are off to the airport. Two hours in check-in and security, but who’s counting. Three hours at the gate, but who’s counting. The flight takes off thirty minutes late, but who’s counting. Takeoff! COVID ward is here. Those who can, sleep, those who cannot, count the minutes and try to find a comfortable posture. Can’t find any, we did not pay enough for it. We have landed! It’s one AM. One suitcase arrives. Second. Third. Fourth: no go. Yaeli is nervous. Waiting. Waiting. Waiting. We have a whole set of luggage! Off to the hotel. Two AM, but who’s counting. Airport shuttle or on foot? It’s supposed to be close by. YOLO, a midnight walk through the airport. Walking walking walking. Here’s the hotel. For some reason the street lights and the hotel sign are off. The wind whistles. “Dad, you didn’t say we are sleeping at a haunted hotel”. There’s no one in reception but there are rooms. Three AM and off to bed, but who’s counting.

March 4, 2019

Data center vignettes #5

It’s Monday. The rain drums on the roof. You are worried about next week’s upgrade to the storage servers. They are struggling. Linux, bless its little heart, is not keeping up with the new NVMe SSDs. With RAID5 and compression, it’s slower than a three-legged turtle. The plan is to put in stronger CPUs and more RAM across the entire storage server fleet.

You sit up straight. An idea has just occurred to you. This could be big. Really big. You know how no one does machine learning in software anymore? How the big cloud guys build custom ASICs? They do it because at scale, a 20% reduction in CPU utilization is huge.

What if you could achieve the same efficiency as the big guys for your storage servers? What if there was a way to accelerate common storage operations in hardware and offload them from the CPU? The performance improvements would be nice, but the TCO savings, beginning with avoiding that messy data-center-wide upgrade next week, are going to be huge. It will delight your boss. And your CFO.

As the rain continues drumming, you realize that you don’t need to build the storage accelerator. Lightbits already built it. You put that upgrade on hold and give them a call to order a batch of LightFields. Your day just got a whole lot better. Even the rain has stopped.

March 2, 2019

Data center vignettes #4

It’s late on a clear and cold Saturday night. You had a few with friends at the pub and now you’re heading home. You can’t wait to get back and snuggle with the cats. And then the email comes in.

“We have a problem. There’s something wrong with the database clusters’ latencies. I’m not sure what’s going on but the tail keeps rising. If this continues for much longer, we are going to be in violation of our SLAs and the brown stuff will hit the fan. Can you take an urgent look?”

Sigh, the cats will have to wait. Good thing you didn’t go for that last round at the pub. Let’s see. The database clusters look OK, no nodes have failed recently, CPU utilization is OK, query processing time within acceptable bounds, what the hell is going on?

And then you see it. Some of the new batch of SSDs are failing on some of the nodes. When they fail, Linux resets them and they come back until they fail again. They have been jittering for the last few hours, slowing down the nodes they’re on. And every time an SSD fails, the latency on that node spikes up, bringing the cluster’s entire tail latency up. It’s either kill those nodes, reducing capacity to a dangerous level, or make a midnight trip to the data center and take care of those drives.

As you navigate the quiet and empty streets on the way to the data center, it occurs to you. Drives have always failed and will continue failing. What you need is for those drives to just fail in place while everything continues working, no slowdown, no tail latency increase. Then you could be home with the cats right now. You keep driving.

At home, if anyone were listening, they might or might not hear the cats quietly meowing “LightOS… use LightOS. From Lightbits. Coming soon.”

March 1, 2019

Data center vignettes #3

It’s Friday. You’ve been hacking on this cool bit of code for a while. It will be such a pleasure to deploy and see the user engagement numbers go up. The CI is green. The code is tight. You take a deep breath and deploy.

Ten minutes later, everything is fine. Ten minutes after that, still good. An hour passes. You check out the Grafana dashboard, and everything looks OK, except… why does the size of one of your key data stores continually increase with the new code?

This is not yet an emergency but it will become one if it keeps up. Each of your servers is limited to two SSDs. The infrastructure guys wanted to keep SKU sprawl to a minimum, and most of the CPU cycles are used for computation anyway, so they decided to “right size” the storage on each server to two SSDs per node. You crunch some numbers and realize that the data store is going to stop growing and stabilize — exactly 100GB after it exhausts all available space on your servers. You curse and roll back the code until they can install more SSDs, sometime next decade.

Wouldn’t it have been nice if there was no limitation on the amount of storage your application could use, while still enjoying the benefits of direct attached SSDs? Enter LightOS, coming soon from Lightbits Labs.

February 28, 2019

Data center vignettes #2

It’s Thursday. The weekend is near. But you may not be able to enjoy it. The word has come down from above with the final requirements for next year’s Cassandra cluster’s growth. It’s going to have to grow by a lot. We’re talking seven if not eight figures. Not uptime or nodes. Dollars. Greenbacks. You’re going to have to invest a few million, maybe tens of millions, in a new storage backend for the Cassandra cluster, because what you have right now is already bursting at the seams. When you built the cluster on direct attached storage, it made sense. That’s what all the cool kids were doing. The one true way to build a cloud: just stick the SSDs into the compute nodes.

As the work week draws to a close, you realize that you’re going to have to think different. To think hyperscale. And hyperscale means storage disaggregation. If it’s good enough for AWS, Facebook, and all those other guys, it has got to be good enough for your Cassandra cluster. But you don’t have their engineering teams or scale (yet). Where are you going to find a disaggregated storage solution that fits your needs, runs on your servers, with your SSDs, and your existing data center network?

Rest easy and enjoy the weekend, friend. Lightbits LightOS is coming soon and it delivers exactly what you need.